Container technology has come a long way from its humble beginnings as a rather esoteric, developer-centric method of virtualizing an operating system (OS). With traditional virtualization software, such as VMware’s ESXi, the hardware itself is virtualized, which allows for isolated OS guests, or virtual machines (VMs). Each VM therefore runs its own OS in isolation on simulated hardware. With containers, however, it is the OS rather than the hardware that is virtualized, in a way that lets just an application and its dependencies run in an isolated process. So instead of hundreds of VMs running hundreds of OS instances, each with its own configuration and dependencies, you have a single OS and containerized definitions of your applications and everything they need.

Containers allow for much greater portability, consistency, and ultimately developer productivity, which is why they’re now at the center of application modernization and digital transformation initiatives. On Microsoft Azure, containers are a first-class citizen, and the new Azure Kubernetes Service (AKS) offering is fully managed, scalable, and built directly into the Azure fabric itself. AKS makes it simple to deploy and operate Kubernetes clusters, letting developers focus on their applications while leveraging Azure’s security, scale, and variable billing for cost efficiency.

So what’s the best way to enable your development teams with AKS? How do you keep tabs on the environment while still allowing for self-service and the native AKS workflows? The right approach depends on the nature of your applications and requirements, but in this example we’ll focus on the basics: what you need to safely extend a basic container capability to your teams. A later post will cover enterprise scenarios with deeper management and security requirements.

The assumption is that IT ops will provision the infrastructure and container platform, giving development teams an abstraction layer that lets them connect with their own tools and interact with the Azure service through the supported channels.

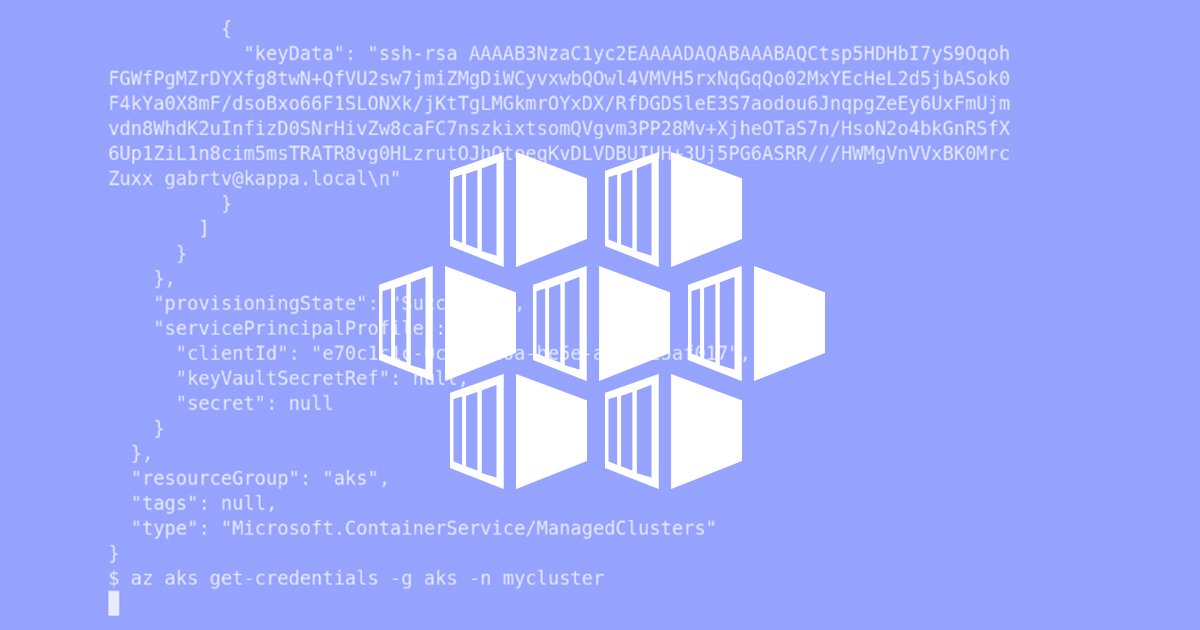

Azure CLI and provisioning the cluster

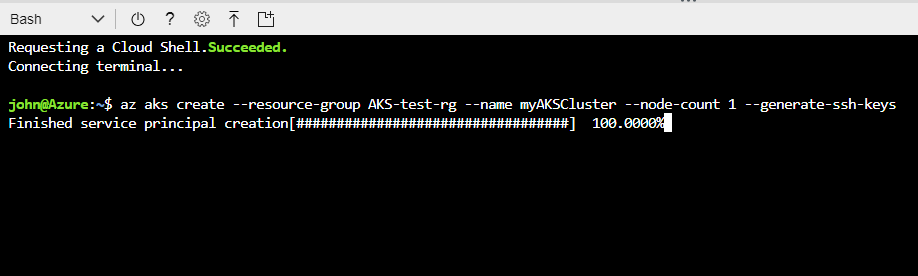

Start off by launching Azure Cloud Shell, a powerful interactive shell experience built directly into Azure. From there you can choose Bash or PowerShell as your shell. Here we’ll use Bash and the Azure CLI, which you can run at the Bash prompt.

The command above deploys a basic, single-node Kubernetes cluster. You can easily scale the node count later via the portal or the Azure CLI, but we’ll start with one node for now. Notice that the “az aks create” command automatically creates a service principal in your Azure AD.

Once the deployment is complete you should have a running instance of AKS.
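As a text sketch of the steps above: create the cluster, confirm it finished provisioning, and point kubectl at it. The resource group `AKS-test-rg` is the one used later in this post; the cluster name `aks-test` and location `eastus` are hypothetical placeholders. Depending on your Azure CLI version, new clusters may use a managed identity instead of the service principal described above.

```shell
#!/usr/bin/env bash
# Minimal sketch. AKS-test-rg matches this post; the cluster name and
# location are hypothetical placeholders, so substitute your own.
RESOURCE_GROUP="AKS-test-rg"
CLUSTER_NAME="aks-test"   # hypothetical
LOCATION="eastus"         # hypothetical

# Create (or update) the resource group, then a single-node cluster.
create_cluster() {
  az group create --name "$RESOURCE_GROUP" --location "$LOCATION" &&
    az aks create \
      --resource-group "$RESOURCE_GROUP" \
      --name "$CLUSTER_NAME" \
      --node-count 1 \
      --generate-ssh-keys
}

# Print the provisioning state; "Succeeded" means the deployment finished.
cluster_state() {
  az aks show \
    --resource-group "$RESOURCE_GROUP" \
    --name "$CLUSTER_NAME" \
    --query provisioningState \
    --output tsv
}

# Merge the cluster credentials into ~/.kube/config and list the nodes.
connect() {
  az aks get-credentials --resource-group "$RESOURCE_GROUP" --name "$CLUSTER_NAME" &&
    kubectl get nodes
}

# Usage in Cloud Shell:
#   create_cluster && cluster_state && connect
# Later, to scale out:
#   az aks scale --resource-group "$RESOURCE_GROUP" --name "$CLUSTER_NAME" --node-count 3
```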

Set up Azure Container Registry (ACR)

ACR is a managed, private registry for your Docker container images. It’s a key component of a secure container environment that follows best practices.

To set up an ACR, run this quick command in Cloud Shell:

az acr create --resource-group AKS-test-rg --name opstest --sku Basic

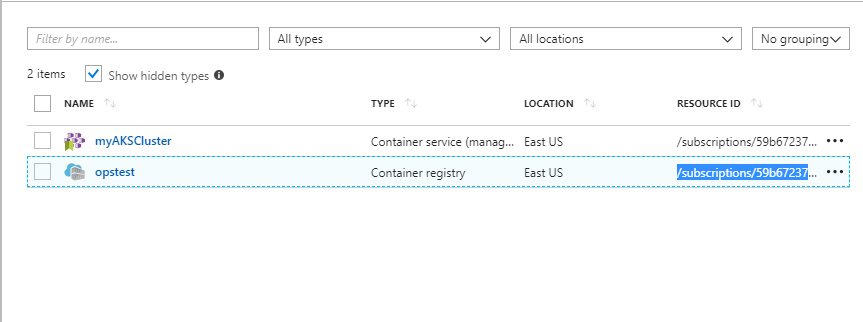

Once that’s set up, go to your resource group in the portal to see its resources. Make sure ‘Resource ID’ is included in your columns, then copy the Resource ID of the opstest ACR you created.
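If you’d rather stay in Cloud Shell than use the portal, the same Resource ID can be read with `az acr show`. A minimal sketch, assuming the `opstest` registry and `AKS-test-rg` group created above:

```shell
#!/usr/bin/env bash
# Sketch: read the registry's Resource ID from the CLI instead of the portal.
RESOURCE_GROUP="AKS-test-rg"
ACR_NAME="opstest"

acr_resource_id() {
  # --query id selects the full Resource ID; tsv prints it without quotes.
  az acr show \
    --name "$ACR_NAME" \
    --resource-group "$RESOURCE_GROUP" \
    --query id \
    --output tsv
}

# Usage: ACR_ID="$(acr_resource_id)" && echo "$ACR_ID"
```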

Next, configure authentication between the AKS cluster and the registry.

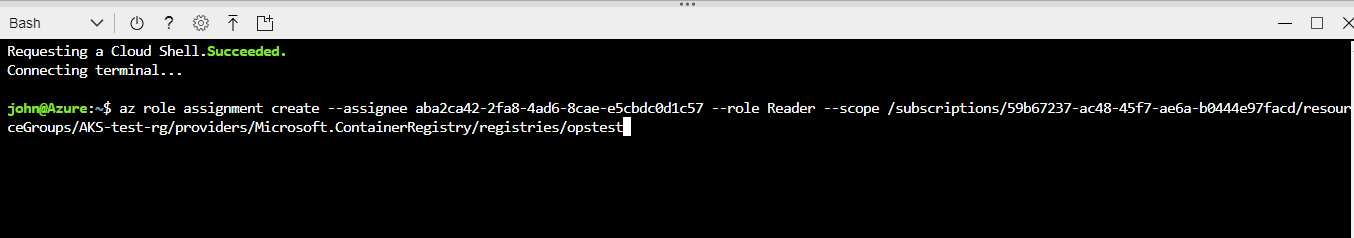

In this example, we run the following command to grant the cluster’s service principal (the assignee) the Reader role on the private container registry (the scope, which is the Resource ID you copied).

az role assignment create --assignee aba2ca42-2fa8-4ad6-8cae-e5cbdc0d1c57 --role Reader --scope /subscriptions/59b67237-ac48-45f7-ae6a-b0444e97facd/resourceGroups/AKS-test-rg/providers/Microsoft.ContainerRegistry/registries/opstest
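The GUIDs in that command are specific to one subscription and cluster. Here is a sketch of the same assignment that looks both values up instead, assuming a service-principal-based cluster with the hypothetical name `aks-test`. On managed-identity clusters, `servicePrincipalProfile.clientId` reads `msi`, so this lookup would not apply. Newer Azure CLI versions also offer `az aks update --attach-acr opstest`, which assigns the AcrPull role instead.

```shell
#!/usr/bin/env bash
# Sketch of the role assignment without hard-coded GUIDs.
# CLUSTER_NAME is a hypothetical placeholder; the group and registry
# names match the ones used in this post.
RESOURCE_GROUP="AKS-test-rg"
CLUSTER_NAME="aks-test"   # hypothetical
ACR_NAME="opstest"

# Client ID of the service principal created by `az aks create`.
cluster_sp_id() {
  az aks show --resource-group "$RESOURCE_GROUP" --name "$CLUSTER_NAME" \
    --query servicePrincipalProfile.clientId --output tsv
}

# Full Resource ID of the registry, used as the assignment scope.
registry_id() {
  az acr show --name "$ACR_NAME" --resource-group "$RESOURCE_GROUP" \
    --query id --output tsv
}

# Grant the cluster's service principal Reader on the registry.
grant_registry_read() {
  local sp_id acr_id
  sp_id="$(cluster_sp_id)" || return 1
  acr_id="$(registry_id)" || return 1
  az role assignment create --assignee "$sp_id" --role Reader --scope "$acr_id"
}
```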

Understand the infrastructure AKS provisions

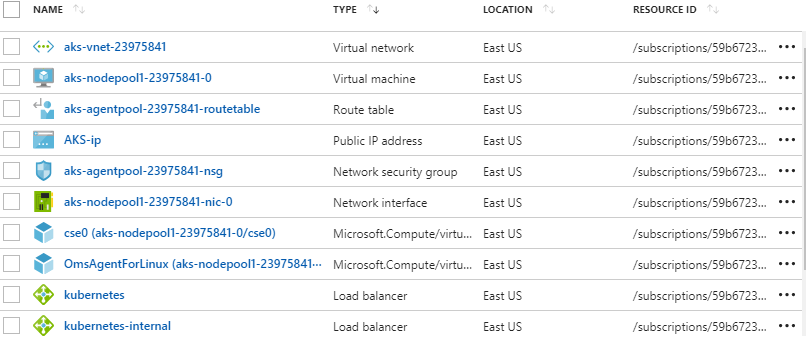

Even though you see only a single AKS resource in your Resource Group, the cluster’s underlying infrastructure is actually deployed into a separate Resource Group that AKS creates alongside the one holding the AKS service. If you search for your Resource Group’s name in the portal, you’ll see the one created by AKS as well as the one you created. It’s easy to miss this and assume everything is tucked neatly under the Azure covers, but that’s definitely not the case.
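To find that second Resource Group from the CLI, ask the cluster for its `nodeResourceGroup`; by default its name follows the pattern `MC_<resource-group>_<cluster>_<location>`. A minimal sketch, again using the hypothetical cluster name `aks-test`:

```shell
#!/usr/bin/env bash
RESOURCE_GROUP="AKS-test-rg"
CLUSTER_NAME="aks-test"   # hypothetical

# Name of the separate resource group AKS created for the cluster's
# infrastructure (by default it starts with "MC_").
node_resource_group() {
  az aks show --resource-group "$RESOURCE_GROUP" --name "$CLUSTER_NAME" \
    --query nodeResourceGroup --output tsv
}

# List every resource AKS placed in that group.
list_infrastructure() {
  local node_rg
  node_rg="$(node_resource_group)" || return 1
  az resource list --resource-group "$node_rg" --output table
}
```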

As shown above, the AKS infrastructure Resource Group contains many critical resources that are now part of your environment. If the container cluster needs to interact with other apps or services (which is almost always the case), you’ll need a load balancer and an IP address. Because these resources expose the cluster to the outside world, it’s critical that ops teams have visibility into the configuration posture of the AKS infrastructure.
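A quick way for ops to see that exposure is to list the load balancers and public IP addresses in the infrastructure Resource Group. In this sketch the group name is passed in as an argument, for example the `nodeResourceGroup` value reported by `az aks show`; the name in the usage comment is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: list the network resources that expose the cluster.
# $1 is the AKS infrastructure resource group (the one AKS created).
list_network_exposure() {
  local node_rg="$1"
  # Load balancers (AKS typically names the public one "kubernetes").
  az network lb list --resource-group "$node_rg" --output table
  # Public IP addresses in the group, such as load balancer frontends.
  az network public-ip list --resource-group "$node_rg" --output table
}

# Usage: list_network_exposure "MC_AKS-test-rg_aks-test_eastus"   # hypothetical name
```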

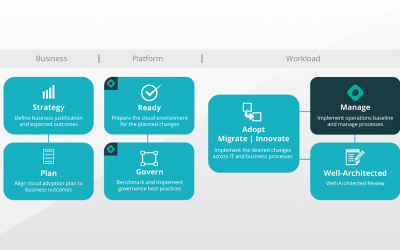

Keeping Control with Helm

When it comes to IT operations, teams generally don’t get to spend much of their time focused on public cloud. Helm is a simple way for IT to deliver cloud services to the business in an automated, cloud-native way. In this scenario, IT needs a way to ensure that the scaffolding, network, and security perimeter of the AKS deployment don’t drift, even as the cluster itself evolves over time. Helm makes this part simple.

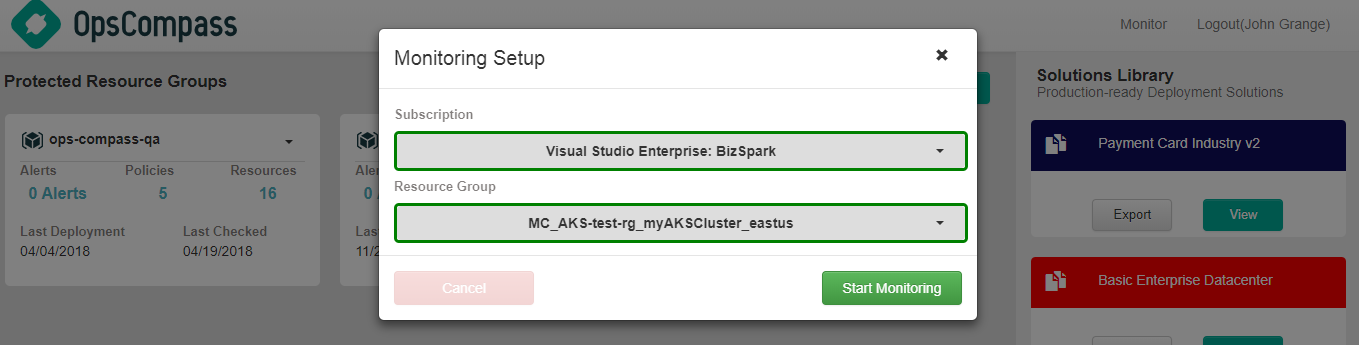

From your dashboard, you can select the AKS infrastructure Resource Group, and the system then queries the Azure API to get an exact definition of the environment. That definition is stored in the database as the approved baseline, and policies are automatically built from it.
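The dashboard handles this capture for you. For comparison only, a rough manual approximation with the Azure CLI is to export the infrastructure Resource Group as an ARM template and keep that file as a baseline. This is not how the tool works internally, and `BASELINE_DIR` and the file naming are hypothetical choices.

```shell
#!/usr/bin/env bash
# Sketch: a manual approximation of capturing a baseline, not the
# dashboard's own mechanism. BASELINE_DIR is a hypothetical location.
BASELINE_DIR="${BASELINE_DIR:-./baselines}"

# Export the resource group as an ARM template, save it as a
# timestamped baseline file, and print the file path.
snapshot_resource_group() {
  local rg="$1" file
  mkdir -p "$BASELINE_DIR"
  file="$BASELINE_DIR/${rg}-$(date +%Y%m%d%H%M%S).json"
  az group export --name "$rg" > "$file" || return 1
  printf '%s\n' "$file"
}

# Usage: snapshot_resource_group "MC_AKS-test-rg_aks-test_eastus"   # hypothetical name
```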

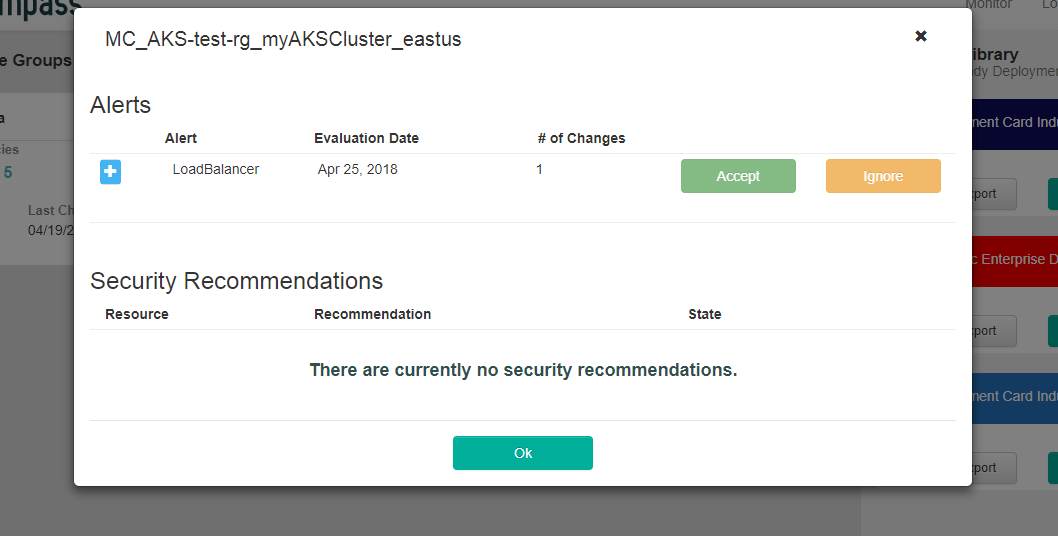

To give your AKS clusters external access to other data sources and systems, a load balancer is deployed with specific configurations that expose the Kubernetes service. Rules can then be created to open access from particular networks on particular IPs. In the cloud these changes happen in real time, and Helm captures them automatically and immediately.
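Again as a rough manual approximation rather than the tool’s own mechanism: save the load balancer rules once as an approved baseline, re-export them later, and diff the two. The load balancer name `kubernetes` is the name AKS typically gives the cluster’s public load balancer, but verify it in your own infrastructure Resource Group; the file names in the usage comment are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: compare current load balancer rules with a saved baseline.

# Print the rules of one load balancer as JSON, keeping only a few fields.
lb_rules() {
  local node_rg="$1" lb_name="${2:-kubernetes}"
  az network lb rule list --resource-group "$node_rg" --lb-name "$lb_name" \
    --query "[].{name:name, frontendPort:frontendPort, backendPort:backendPort, protocol:protocol}" \
    --output json
}

# Returns 0 when the files match; prints a unified diff and returns 1 otherwise.
check_drift() {
  local baseline="$1" current="$2"
  if diff -u "$baseline" "$current"; then
    echo "No drift detected."
  else
    echo "Drift detected: review the changes above." >&2
    return 1
  fi
}

# Usage:
#   lb_rules "$NODE_RG" > baseline-rules.json   # once, after approving
#   lb_rules "$NODE_RG" > current-rules.json    # later
#   check_drift baseline-rules.json current-rules.json
```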

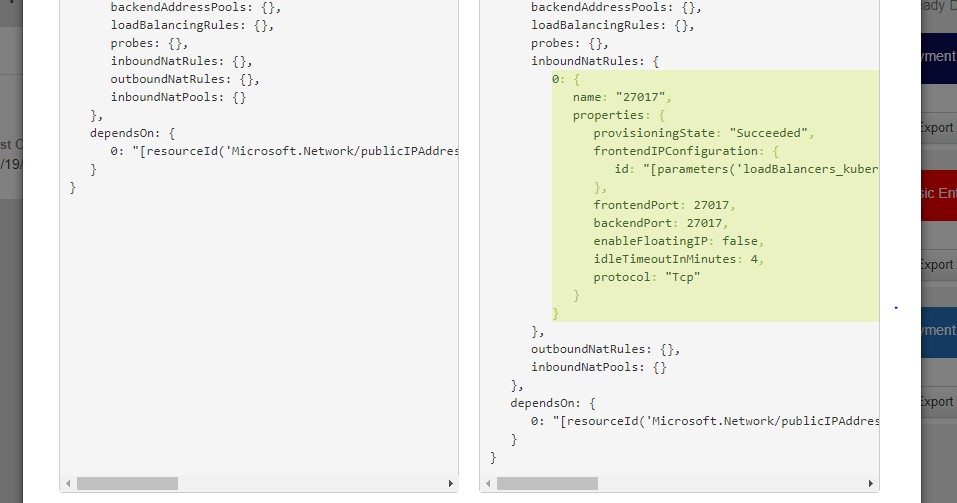

In the example above, Kubernetes was configured with an ingress controller as well as internal access to other apps on the subnet. These changes in Kubernetes can manifest themselves in Azure Load Balancer configuration changes that impact your overall security posture.
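For illustration, internal access like this is commonly requested with a Kubernetes Service annotation that tells AKS to provision an internal Azure load balancer (typically named `kubernetes-internal`) instead of a public one; that new load balancer is exactly the kind of infrastructure change described above. A minimal sketch in which the app name and port are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: print a Service manifest that asks AKS for an internal Azure
# load balancer, so the app is reachable only from inside the virtual network.
# $1 is a hypothetical app label; $2 is the port (default 80).
internal_service_manifest() {
  local app="$1" port="${2:-80}"
  cat <<EOF
apiVersion: v1
kind: Service
metadata:
  name: ${app}-internal
  annotations:
    service.beta.kubernetes.io/azure-load-balancer-internal: "true"
spec:
  type: LoadBalancer
  selector:
    app: ${app}
  ports:
    - port: ${port}
EOF
}

# Usage: internal_service_manifest my-app 80 | kubectl apply -f -
```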

You can expand the alert to quickly see its details, with the key configuration change highlighted in green. Approving the alert automatically creates a new policy; alternatively, you can hold it and investigate further.

Conclusion

Azure has a powerful container offering in AKS, but even though it is a managed service (and it truly is, from an integration and platform perspective), there are still critical infrastructure components that must be accounted for. The right tools make all the difference: being able to establish and maintain an infrastructure baseline without much manual intervention actually helps development move faster.